Docker is just a simple process

This week I have been discussing Docker with a lot of friends. The word became very popular in 2014 and has since consolidated into a buzzword that is always present in our technical discussions and meetings. Everybody knows how to write a Dockerfile, how to use it, and more.

But container technology is nothing new. Its roots go back to chroot, introduced in 1979 during the development of Version 7 Unix[1], which changes the root directory of a process and its children. Later on, in 2000, FreeBSD introduced Jails, built upon the chroot concept. A jail creates a safe environment, separate from the rest of the system: processes created inside it cannot access files or resources outside of it.

When discussing this, not everybody knows that a running Docker container is just a Linux process with an isolated view of the underlying host. What Docker Inc. did was make it easy to use complex mechanisms that the kernel already provides, through a REST API exposed on a local Unix socket (/var/run/docker.sock by default) and a command-line client. Those kernel features include:

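- Namespaces: what the process can see (for example PIDs, mounts, network, hostname)
- Capabilities: which root privileges the process keeps
- Cgroups: how much CPU, memory, and I/O the process can use
- SELinux/AppArmor: mandatory access control policies applied to the process
- seccomp: which system calls the process is allowed to make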
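To see these features on a real process, without Docker in the middle, we can read them straight from /proc. The sketch below is a hypothetical helper (inspect_isolation is my own name, not a standard tool) that prints the namespaces, capability masks, seccomp mode, cgroups, and LSM label of a PID. It assumes a Linux host; reading another user's process, such as a container's, generally requires root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the kernel isolation features attached to a
# process, read directly from /proc. Defaults to the current shell ($$).
inspect_isolation() {
  local pid="${1:-$$}"
  local ns

  echo "== namespaces =="
  # Each entry is a symlink such as "pid:[4026531836]"; two processes share
  # a namespace when the numbers in brackets match.
  for ns in /proc/"$pid"/ns/*; do
    printf '%-18s %s\n' "${ns##*/}" "$(readlink "$ns")"
  done

  echo "== capabilities (hex bitmasks) =="
  grep -E '^Cap(Inh|Prm|Eff|Bnd|Amb):' /proc/"$pid"/status

  echo "== seccomp mode (0=disabled, 1=strict, 2=filter) =="
  grep -E '^Seccomp:' /proc/"$pid"/status

  echo "== cgroups =="
  cat /proc/"$pid"/cgroup

  echo "== LSM label (AppArmor or SELinux) =="
  cat /proc/"$pid"/attr/current 2>/dev/null || echo "unavailable"
}
```

Comparing inspect_isolation $$ (your own shell) with the same function run as root against a container's main process, for example sudo bash -c "$(declare -f inspect_isolation); inspect_isolation 4957", should show different namespace numbers, and usually Seccomp: 2 for the container, since Docker applies a default seccomp profile unless told otherwise.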

That said, the work behind the idea of packaging software into standardized units for development, shipment, and deployment is brilliant and very cool for the …..ops teams: we no longer need to worry about binaries, dependencies, or painful installations, because everything is packaged. And since a container is just a process, let's run a couple of experiments.

For the experiments I will use the nginx Docker image. By the way, I'm running everything inside a Vagrant VM with an Ubuntu Focal (20.04) base box, because Docker on macOS works differently: containers run inside a Linux VM, so their processes are not visible from the macOS host. If we look for an nginx process on the host before starting anything, we get nothing apart from the grep command itself:

```
vagrant@containers:~$ ps -ef | grep nginx
vagrant 4961 4777 0 17:28 pts/0 00:00:00 grep --color=auto nginx
```

Now let's run our container with the name experiment. Since this is the first time, Docker downloads the image from the default registry, in this case Docker Hub. We also want the container to keep running in detached mode (in the background), so we use the -d option:

```
vagrant@containers:~$ docker run -d --name experiment nginx:latest
Unable to find image 'nginx:latest' locally
latest: Pulling from library/nginx
bd159e379b3b: Pull complete
8d634ce99fb9: Pull complete
98b0bbcc0ec6: Pull complete
6ab6a6301bde: Pull complete
f5d8edcd47b1: Pull complete
fe24ce36f968: Pull complete
Digest: sha256:2f770d2fe27bc85f68fd7fe6a63900ef7076bc703022fe81b980377fe3d27b70
Status: Downloaded newer image for nginx:latest
6996e227807af0fd5383078f87404b19a64cb0b00aadef84a772336c40d777a8
```

Checking the output of docker ps, we can see that the container is running:

```
vagrant@containers:~$ docker ps
CONTAINER ID   IMAGE          COMMAND                  CREATED          STATUS          PORTS     NAMES
6996e227807a   nginx:latest   "/docker-entrypoint.…"   18 seconds ago   Up 17 seconds   80/tcp    experiment
```
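The docker command is only a client: docker ps becomes an HTTP request against the daemon's REST API on its Unix socket. A minimal sketch of the same query with curl, assuming the default socket path /var/run/docker.sock, a curl build with --unix-socket support, and a user allowed to read the socket (root or the docker group). docker_api, list_running_containers, and DOCKER_SOCK are hypothetical names of my own.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send a GET request to the Docker Engine API over its
# Unix socket. DOCKER_SOCK is an illustrative override, not a Docker setting.
docker_api() {
  local path="$1"
  # Only the socket matters for reaching the daemon; "localhost" just fills
  # in the host part of the URL.
  curl --silent --show-error \
       --unix-socket "${DOCKER_SOCK:-/var/run/docker.sock}" \
       "http://localhost${path}"
}

# GET /containers/json returns the running containers as JSON,
# the same data docker ps formats as a table.
list_running_containers() {
  docker_api "/containers/json"
}
```

Piping list_running_containers into python3 -m json.tool should show the experiment container with fields such as Id, Names, Image, and State.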

If we look again with the ps command, we now see three nginx processes: the master process and two workers, whose number comes from the worker_processes directive in the nginx configuration:

```
vagrant@containers:/proc/5233$ ps -ef | grep -i nginx
root 4957 4933 0 17:55 ? 00:00:00 nginx: master process nginx -g daemon off;
systemd+ 5028 4957 0 17:55 ? 00:00:00 nginx: worker process
systemd+ 5029 4957 0 17:55 ? 00:00:00 nginx: worker process
vagrant 5066 4641 0 17:55 pts/0 00:00:00 grep --color=auto -i nginx
```

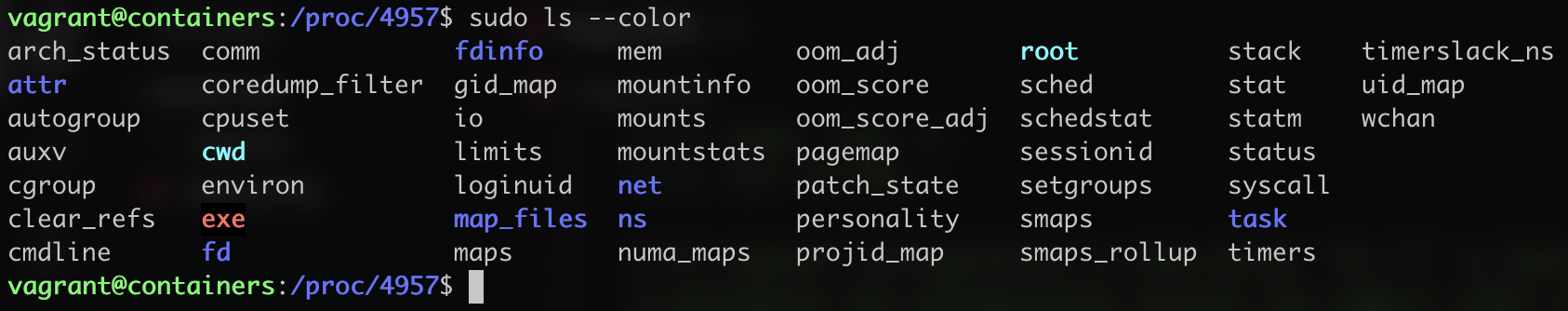
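Instead of grepping ps, Docker can report the host PID of a container's main process, and /proc then lets us walk up the process tree. The sketch below assumes the container is called experiment as above; container_pid and parent_chain are hypothetical helper names. On recent Docker releases I would expect the master's parent (4933 in the output above) to be a containerd shim, printed as containerd-shim because /proc/<pid>/comm truncates names to 15 characters, but the exact chain depends on the Docker version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: host PID of a container's main process, as reported
# by Docker (0 if the container is not running).
container_pid() {
  docker inspect --format '{{.State.Pid}}' "$1"
}

# Hypothetical helper: print a process and its ancestors, one "PID<TAB>name"
# per line, by following the PPid field of /proc/<pid>/status.
parent_chain() {
  local pid="${1:-$$}"
  while [ -n "$pid" ] && [ "$pid" -gt 0 ]; do
    # comm holds the short command name, truncated to 15 characters.
    printf '%s\t%s\n' "$pid" "$(cat /proc/"$pid"/comm 2>/dev/null)"
    [ "$pid" -eq 1 ] && break
    pid="$(awk '/^PPid:/ {print $2}' /proc/"$pid"/status 2>/dev/null)"
  done
}
```

For example, parent_chain "$(container_pid experiment)" should start with 4957 and nginx, continue with the shim, and typically end at systemd as PID 1.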

So process 4957 is the nginx master process, the parent of the two workers. I can also validate the theory by creating a file in the root directory of the container itself:

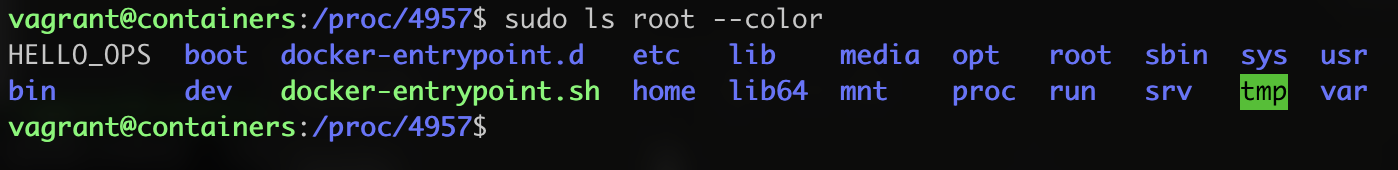

```
vagrant@containers:/proc/5233$ docker exec experiment touch /HELLO_OPS
```
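docker exec itself relies on the same kernel primitives: it starts a new process inside the container's namespaces. With nsenter from util-linux we can do something similar without going through Docker, given the host PID of the master process (4957 here) and root privileges. A minimal sketch; in_container_ns is a hypothetical wrapper name. Unlike docker exec, this only joins namespaces and does not apply the container's cgroup, seccomp profile, or capability set.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a command inside the mount, UTS, IPC, network,
# and PID namespaces of an existing process (needs root).
in_container_ns() {
  local pid="$1"
  shift
  nsenter --target "$pid" --mount --uts --ipc --net --pid -- "$@"
}
```

Running sudo nsenter --target 4957 --mount --uts --ipc --net --pid -- ls -l /HELLO_OPS should list the file created with docker exec, because the command sees the container's root filesystem.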

If we open an interactive shell in the running nginx container and go to the root directory, we will see the file. But, as I mentioned, we can also check it from the underlying host's filesystem, using the PID of the master process:

Filesystem structure of our nginx process

Looking at the previous result, we can check the container's filesystem:

Root Filesystem structure

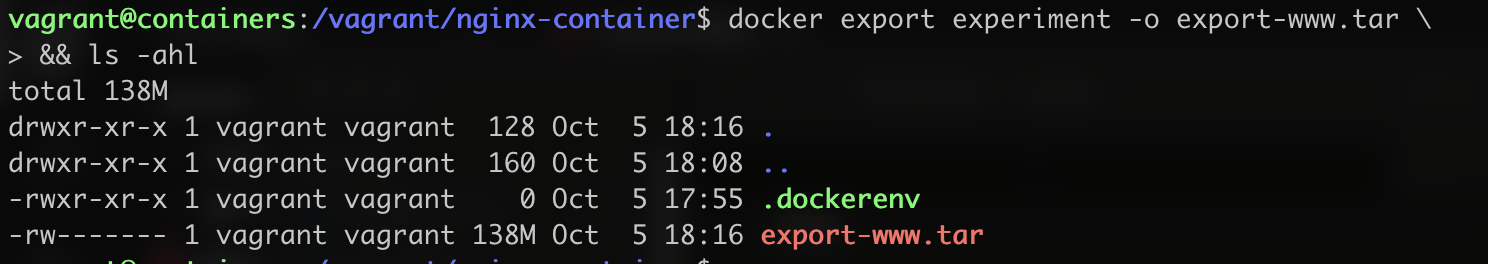
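The same check can be scripted. /proc/<pid>/root is a link to the root directory as seen by that process, so with the master's PID the host can read the container's filesystem without entering it. A minimal sketch with hypothetical helper names, assuming root access when the PID belongs to the container.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the root directory of a process, as that process
# sees it, through the /proc/<pid>/root link.
show_root() {
  local pid="${1:-$$}"
  ls -la /proc/"$pid"/root/
}

# Hypothetical helper: succeed if the given path exists inside the
# process's root directory.
has_file_in_root() {
  local pid="$1" path="$2"
  [ -e "/proc/${pid}/root/${path#/}" ]
}
```

As root, has_file_in_root 4957 /HELLO_OPS && echo found should print found. With the overlay2 storage driver, docker inspect --format '{{.GraphDriver.Data.MergedDir}}' experiment shows the host directory behind that view.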

There we have the file I created, HELLO_OPS. We also have the option to export the running container's filesystem to a tar file using the docker export subcommand.
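A minimal sketch of that export, checking that HELLO_OPS landed in the archive. export_and_check is a hypothetical helper and the output file name is arbitrary; the pattern accepts entries with or without a leading ./ so it does not depend on the exact path format inside the archive.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export a container's filesystem to a tar file and
# succeed if the given file is present at the root of the archive.
export_and_check() {
  local container="$1" file="${2#/}" out="${3:-$1.tar}"
  docker export --output "$out" "$container" || return 1
  # List the archive entries and look for the file, with or without "./".
  tar -tf "$out" | grep -qE "^(\./)?${file}\$"
}
```

For example, export_and_check experiment /HELLO_OPS && echo "HELLO_OPS is in experiment.tar". Extracting it with mkdir rootfs && tar -xf experiment.tar -C rootfs gives the container's whole filesystem as ordinary files.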

There is a short and interesting article by Vladislav where he explains the composition of a Dockerfile and the meaning of image layers. If we extract the exported tarball, we get the container's whole filesystem.
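Layers can be inspected from the command line too: docker image history lists the build step behind each entry, and docker save writes the image (layers plus metadata) to a tar archive whose manifest.json lists the layer files. A sketch with a hypothetical helper name; the archive layout differs between Docker versions (newer releases write an OCI image layout), so treat the manifest location as an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show an image's build history, then save the image
# and print the manifest that lists its layer tarballs.
show_layers() {
  local image="$1" out="${2:-image.tar}"
  # One row per build step; metadata-only steps (CMD, ENV...) show 0B.
  docker image history --no-trunc "$image" || return 1
  docker save --output "$out" "$image" || return 1
  # Print manifest.json from the archive to stdout without extracting it.
  tar -xOf "$out" manifest.json
}
```

For the image used here, show_layers nginx:latest nginx-image.tar should list one layer per Pull complete line seen during docker run.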

So the next time someone asks you about Docker and containers, remember: they're just a bunch of compressed tarballs and processes 😄

Read me in the next one 😃

H 🚀

PS: If you want to run these experiments and more, you can use my Vagrant configuration: https://gitlab.com/-/snippets/2422731

References

[1] BYTE Magazine, October 1983 (Internet Archive): https://archive.org/details/byte-magazine-1983-10/page/n497/mode/2up?view=theater